The WHO uses evidence from field trials (Phase III) and pilot interventions (Phase IV) to make policy recommendations. The quality of evidence produced by these trials is rated using the Grading of Recommendations Assessment, Development and Evaluation (GRADE) methodology. Unfortunately, vector control interventions frequently lack high-quality research to support their recommendation in disease control programmes, and assessments of interventions against vector-borne diseases that are not rigorous and evidence-based can waste considerable money, time, and energy. It is crucial that future studies are designed to provide high-quality data.

1. Study design

1.1. Randomised and cluster-randomised studies

To evaluate the efficacy of a protective intervention, randomised controlled trials (RCTs) are recommended above all other designs. In RCTs, individuals or clusters (such as households, villages, or districts) are allocated at random to receive the intervention or a control, and the two groups are then followed up. The most important benefit of these designs is therefore a low risk of selection bias. RCTs are discussed in full below.

1.2. Other randomised designs

Randomised controlled before-and-after studies

In controlled before-and-after studies, data are collected on outcome measures at a single time point before and a single time point after the intervention is implemented. At the same time points, data are also collected from a control group that does not receive the intervention.
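One common way to summarise data from this kind of design is a difference-in-differences contrast: the change in the intervention group minus the change in the control group. The sketch below is illustrative only. The function name and the mosquito counts are hypothetical, and a real analysis would also need to account for clustering and report uncertainty.

```python
from statistics import mean


def difference_in_differences(pre_int, post_int, pre_ctrl, post_ctrl):
    """Change in the intervention group minus change in the control group."""
    change_intervention = mean(post_int) - mean(pre_int)
    change_control = mean(post_ctrl) - mean(pre_ctrl)
    return change_intervention - change_control


# Hypothetical mosquito counts per house (invented for illustration)
pre_intervention = [12, 15, 11, 14]
post_intervention = [6, 7, 5, 6]
pre_control = [13, 14, 12, 13]
post_control = [11, 12, 10, 11]

effect = difference_in_differences(
    pre_intervention, post_intervention, pre_control, post_control
)
print(f"Estimated change attributable to the intervention: {effect}")
```

A negative value indicates that the outcome fell further in the intervention group than in the control group over the same period.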

Randomised controlled time series and interrupted time series studies

Where full randomisation is not possible, controlled time series and controlled interrupted time series (ITS) studies offer the ability to estimate causal effects using observational approaches. In controlled time series studies, outcome measures are taken at several time points following implementation of the intervention, in both the intervention and the control group. In ITS designs, data are collected before as well as after the introduction of the intervention, again at several time points in the intervention and control groups. These designs minimise the weaknesses of single measurements. However, time series methods are not appropriate when the intervention is introduced at more than one time point or when there are external time-varying effects such as seasonality [1].

Randomised cross-over studies

In cross-over studies, individuals or clusters receive the intervention or control for a period of time, then switch to the other arm. There is usually a period of time between the first and second parts of the study to allow the effects of the first treatment to wear off. Where the intervention is likely to have long-term effects, as with environmental management, such a design would not be suitable.

Randomised step-wedge designs

Step-wedge studies involve the roll-out of an intervention to different clusters. The roll-out occurs in stages, usually in a random order, with sequential cross-over from control to intervention until all clusters have received the intervention. As it may be difficult to implement complex interventions en bloc, the step-wedge design offers a fair way for the order of roll-out to be determined and provides randomised evidence of effectiveness [2].
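The roll-out described above can be sketched as a randomised schedule. This is a minimal illustration, assuming equal-length periods, a baseline period in which every cluster is a control, and clusters spread as evenly as possible across steps. The function name and cluster labels are hypothetical.

```python
import random


def stepped_wedge_schedule(clusters, n_steps, seed=None):
    """Randomly order clusters and assign each to a cross-over step.

    Returns a dict mapping each cluster to a list of 0/1 flags, one per
    period (0 = control, 1 = intervention). Period 0 is an all-control
    baseline; by period n_steps every cluster has the intervention.
    """
    rng = random.Random(seed)
    order = list(clusters)
    rng.shuffle(order)  # random roll-out order
    schedule = {}
    for i, cluster in enumerate(order):
        step = 1 + i % n_steps  # spread clusters evenly over steps 1..n_steps
        schedule[cluster] = [1 if period >= step else 0
                             for period in range(n_steps + 1)]
    return schedule


# Hypothetical example: six villages rolled out over three steps
villages = ["A", "B", "C", "D", "E", "F"]
for village, exposure in sorted(stepped_wedge_schedule(villages, 3, seed=1).items()):
    print(village, exposure)
```

Each row switches from 0 to 1 exactly once and never switches back, which is the one-directional cross-over that defines the design.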

1.4. Observational studies

In observational studies investigators observe subjects and measure variables of interest, but have no role in assigning the intervention to the participants. Observational studies can take the form of case-control, cohort, or cross-sectional studies, and may provide evidence of the efficacy of vector control interventions. For example, McBride et al. [3] used an observational cross-sectional study to assess determinants of dengue infection in northern Australia by collecting data from a population at a specific point in time and analysing the data for both the prevalence of the disease and use of the protective interventions. An observational case-control study in Taiwan compared the use of mosquito nets, indoor residual spraying, indoor screens and other interventions between patients with dengue and control individuals without dengue [4]. However, the data collected from such observational studies may be subject to confounding and other biases, so the evidence they provide is considered to be weaker than that from randomised experimental studies.

1.5. Study design recommendation

RCTs are the gold standard study design for vector control interventions. Randomised controlled before-and-after studies, randomised time series, randomised ITS, and randomised step-wedge studies also provide high-quality evidence. A second tier of evidence is provided by non-randomised controlled before-and-after studies, non-randomised ITS, and non-randomised step-wedge studies, followed by observational studies. Non-randomised controlled trials, non-randomised controlled time series, studies without a control group, and studies using historical control groups are not recommended [5].

2. Study Duration

The duration of a study depends on the study design and the context of pathogen transmission. For randomised controlled trials, pre-intervention entomological data should cover at least one transmission season if sampling sites are non-randomly selected, and post-intervention data should cover at least one transmission season, preferably two. For dengue, three months is considered the minimum period required to demonstrate a sustained impact on the vector population and/or on disease transmission [6]. Infection with some pathogens, such as malaria parasites, can last for one year or more, and periods of several years may be required to evaluate the long-term effects of interventions on malaria prevalence [7].

By collecting entomological data over sustained periods it becomes possible to assess whether the effects of an intervention are diminishing, and therefore how often the intervention will need to be reapplied or replaced. Further, longer follow-up periods offer the opportunity for repeat sampling. This is important because vector density can vary considerably over time due to environmental factors, so a reliable measure of the effect of an intervention may not be obtained without sufficient sampling.

3. Randomised Controlled Trials

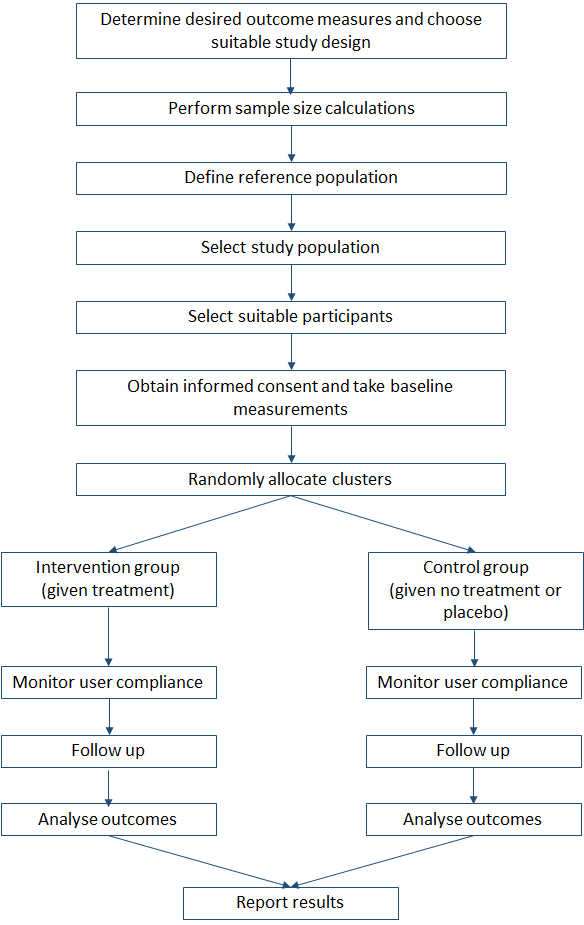

Randomised controlled trials are the most rigorous way of determining whether there is a cause-effect relation between an intervention and outcome. Important features of RCTs are that interventions are randomly allocated, the groups are treated identically except for the experimental intervention, and the analysis is focused on estimating the size of the difference in predefined outcomes between groups. Figure 1 shows the flow of activities that are involved in designing the trial through to outcome analysis and reporting.

Figure 1. Randomised controlled trial flow chart

3.1. Importance of randomisation

Randomisation is important because it can minimise selection and other types of bias. Vector control tools will often work at the community level (for example, long-lasting insecticidal nets can help protect everyone in the area, not just those sleeping under the nets), and in these cases it is important that randomisation takes place at the appropriate group or cluster level.

Studies involving interventions at the individual level should also be randomised, but they can have problems of contamination: if different individuals in a community are given different interventions, such as insect repellents or repellent clothing, any sharing of these products with other individuals would interfere with the study results. It is therefore often preferable to allocate the intervention to individuals in a cluster, then randomise the clusters.
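As an illustration of randomising at the cluster level, the sketch below shuffles a list of clusters and deals them alternately into arms so that arm sizes stay balanced. It shows simple unrestricted randomisation only. Stratified or restricted randomisation, which trials often use to balance baseline characteristics, is not shown. The function name and village labels are hypothetical.

```python
import random


def randomise_clusters(clusters, arms=("intervention", "control"), seed=None):
    """Allocate whole clusters to study arms at random, with balanced arm sizes."""
    rng = random.Random(seed)
    shuffled = list(clusters)
    rng.shuffle(shuffled)  # random order removes investigator choice
    # Deal the shuffled clusters out to the arms in turn
    return {cluster: arms[i % len(arms)] for i, cluster in enumerate(shuffled)}


# Hypothetical example: ten villages allocated to two arms
villages = [f"village_{n}" for n in range(1, 11)]
allocation = randomise_clusters(villages, seed=2024)
for village in villages:
    print(village, allocation[village])
```

Recording the seed alongside the allocation list makes the randomisation reproducible and auditable. Everyone living in a given cluster then receives that cluster's allocation.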

It should be noted that while RCTs are rated as high-quality evidence by the GRADE methodology, studies can be up- or downgraded based on several factors. For example, RCTs can be downgraded if there is risk of bias, inconsistency, or imprecision, and a non-RCT could be upgraded if a large effect size is observed. Nonetheless, non-randomised controlled trials are not recommended, as selection bias is likely to be high and there are commonly no pre-intervention data to assess the comparability of test groups. Observational studies, such as case-control or cross-sectional studies, provide relatively weak evidence to support the effect of vector control interventions and rank below non-RCTs.

3.2. Adherence to interventions

If an intervention, such as the use of repellents or nets within the home, fails to have an effect, it is important to know whether this results from low user compliance or from the tools not being effective. Ensuring high coverage and monitoring user adherence to the intervention are therefore vital. This may be achieved through education, communication, supervision, and random spot checks [5].

3.3. Contamination effects

Contamination or spillover effects occur when there is movement of vectors or people between study arms. This could interfere with the study by diluting the impact of the intervention, because people within a treatment cluster may spend time in a control cluster and increase their risk of infection. Conversely, the impact of an intervention could be exaggerated if it drives vectors from the treatment cluster to the control cluster, thereby increasing infection rates in the control. This effect can be minimised by ensuring that clusters are well separated or have a sufficient buffer zone. Travel histories can also help to minimise this type of bias. Further, by limiting enrolment numbers within an area it may be possible to avoid problems associated with repellents diverting mosquitoes to other individuals.

Where cross-over trials are designed, in which individuals or clusters receive the intervention or control treatment for a set period of time and then switch over to receive the other, it is important to avoid any persistent effects that an intervention may have. If the intervention is an insecticide or involves a change to the habitat, this type of design might not be suitable because of the long-term impact it may have.

3.4. Sample size

All trials should have a sample size calculation to ensure they are sufficiently powered to detect the intervention effect. If parameters such as disease incidence in the control group are unknown, it will be necessary to estimate these using baseline surveys before the start of a trial. Additionally, follow-up periods need to be carefully chosen based on the length of the transmission season and the ability to detect new infections within the follow-up period. Follow-up periods should also take into consideration changes in user compliance: early in a study individuals may show strong compliance, but this is likely to fall as the trial continues.
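As a rough illustration of such a calculation for a cluster-randomised trial with a binary outcome, the sketch below uses the standard two-proportion sample size formula inflated by a design effect, 1 + (m - 1) × ICC, where m is the number of individuals per cluster and ICC is the intracluster correlation coefficient. This is one widely used approximation, not a method prescribed by the text above. It assumes equal cluster sizes, and all input values are hypothetical. A trial statistician should confirm any real calculation.

```python
from math import ceil
from statistics import NormalDist


def clusters_per_arm(p_control, p_intervention, cluster_size, icc,
                     alpha=0.05, power=0.80):
    """Approximate number of clusters needed per arm (two-sided test)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance_sum = (p_control * (1 - p_control)
                    + p_intervention * (1 - p_intervention))
    # Individuals per arm if individuals were randomised independently
    n_individual = ((z_alpha + z_beta) ** 2 * variance_sum
                    / (p_control - p_intervention) ** 2)
    # Inflate for within-cluster correlation
    design_effect = 1 + (cluster_size - 1) * icc
    return ceil(n_individual * design_effect / cluster_size)


# Hypothetical inputs: 20% prevalence in control, 10% expected with the
# intervention, 50 people sampled per cluster, ICC of 0.05
print(clusters_per_arm(0.20, 0.10, cluster_size=50, icc=0.05))
```

Even a small ICC increases the required number of clusters substantially, which is why baseline estimates of between-cluster variation are worth collecting before the trial starts.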

3.5. Study outcomes

Use of the appropriate epidemiological outcome of a study is extremely important. Entomologists may focus on entomological outcomes such as reduction in mosquito numbers, but these end points are not always good predictors of epidemiological outcomes. Suitable outcomes for assessing the impact on disease transmission or human health include incidence of disease, disease-specific mortality, or the prevalence of infection in blood samples.

Entomological monitoring should, however, still be undertaken, and sites should be chosen randomly. Historically, entomological monitoring has taken place in sites already known or suspected to have high densities of vectors, which can introduce sampling bias, so random selection is recommended. Further, detection bias can be a problem in vector control studies, particularly in trials where staff distributing the intervention are also collecting the outcome data. Ensuring that all staff are blinded to the randomisation, as far as possible, can address this, but for some interventions, such as screens in homes, blinding is not always possible.

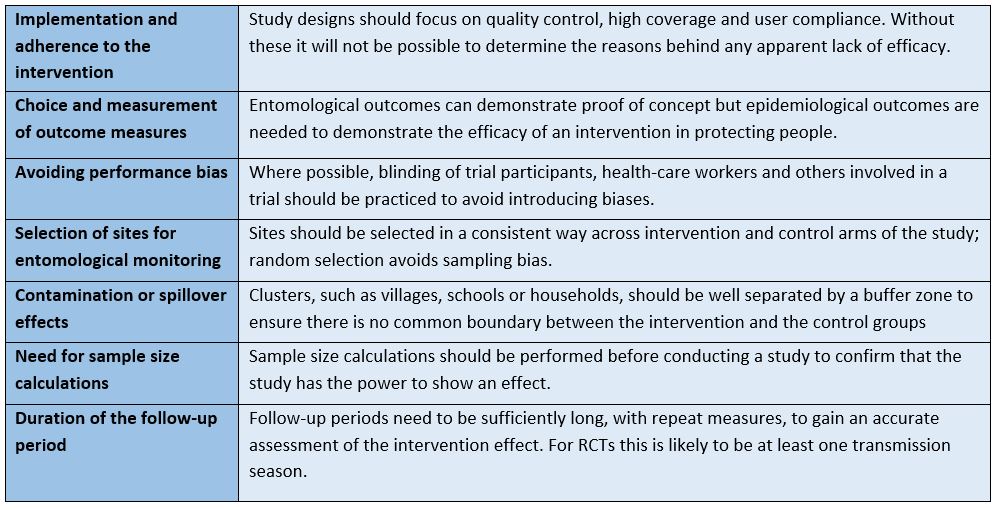

Table 1. Summary of vector control trial recommendations.

References

[1] Kontopantelis E, Doran T, Springate DA, Buchan I, Reeves D. Regression based quasi-experimental approach when randomisation is not an option: interrupted time series analysis. BMJ. 2015 Jun 9;350:h2750. https://www.bmj.com/content/350/bmj.h2750.short

[2] Hemming K, Haines TP, Chilton PJ, Girling AJ, Lilford RJ. The stepped wedge cluster randomised trial: rationale, design, analysis, and reporting. BMJ. 2015 Feb 6;350:h391. https://www.bmj.com/content/350/bmj.h391.long

[3] McBride WJ, Mullner H, Muller R, Labrooy J, Wronski I. Determinants of dengue 2 infection among residents of Charters Towers, Queensland, Australia. American Journal of Epidemiology. 1998 Dec 1;148(11):1111-6. https://academic.oup.com/aje/article-pdf/148/11/1111/285723/148-11-1111.pdf

[4] Ko YC, Chen MJ, Yeh SM. The predisposing and protective factors against dengue virus transmission by mosquito vector. American Journal of Epidemiology. 1992 Jul 15;136(2):214-20. https://academic.oup.com/aje/article-pdf/136/2/214/305117/136-2-214.pdf

[5] Wilson AL, Boelaert M, Kleinschmidt I, Pinder M, Scott TW, Tusting LS, Lindsay SW. Evidence-based vector control? Improving the quality of vector control trials. Trends in Parasitology. 2015 Aug 1;31(8):380-90. https://www.sciencedirect.com/science/article/pii/S1471492215000975

[6] Bowman LR, Donegan S, McCall PJ. Is dengue vector control deficient in effectiveness or evidence?: Systematic review and meta-analysis. PLoS Neglected Tropical Diseases. 2016 Mar 17;10(3):e0004551. https://journals.plos.org/plosntds/article?id=10.1371/journal.pntd.0004551

[7] Sama W, Killeen G, Smith T. Estimating the duration of Plasmodium falciparum infection from trials of indoor residual spraying. The American Journal of Tropical Medicine and Hygiene. 2004 Jun 1;70(6):625-34. https://pdfs.semanticscholar.org/2ee9/6fcee20bee6ea44f2aff582a8fec0e935cd2.pdf